How millions of people can watch the same video at the same time – a computer scientist explains the technology behind streaming

- Streaming technology enables millions of people to watch the same video at the same time, with the men’s cricket World Cup final match between Australia and India reaching a peak of 59 million concurrent streaming viewers.

- The defining aspect of streaming is its on-demand nature, allowing users to access real-time and on-demand content worldwide, as seen in the global reach of Joe Rogan podcast episodes or live coverage of SpaceX Crew Dragon spacecraft launches.

- Video streaming poses significant challenges, including massive video data sizes, adaptive quality for different devices and internet capabilities, and geographic latency and network congestion issues when delivering content to a large number of users simultaneously.

- Content delivery networks (CDNs) have emerged as a cornerstone of modern streaming, distributing content through globally scattered points of presence that reduce latency and improve reliability, with strategies like “Enter Deep” and “Bring Home” optimizing video streaming by minimizing delays and bandwidth waste.

- The rapid expansion of the internet and the surge in video streaming require sophisticated solutions to handle massive amounts of video data, reduce geographic latency and accommodate varying user devices and internet speeds, highlighting the innovative approaches needed to meet the expectations of a connected world.

Live and on-demand video constituted an estimated 66% of global internet traffic by volume in 2022, and the top 10 days for internet traffic in 2024 coincided with live streaming events such as the Jake Paul vs. Mike Tyson boxing match and coverage of the NFL. Streaming enables seamless, on-demand access to video content, from online gaming and short videos like TikToks to longer content such as movies, podcasts and NFL games.

The defining aspect of streaming is its on-demand nature. Consider the global reach of a Joe Rogan podcast episode or the live coverage of the SpaceX Crew Dragon spacecraft launch – both examples demonstrate how streaming connects millions of viewers to real-time and on-demand content worldwide.

I’m a computer scientist whose research includes cloud computing, which is the distribution of computing resources such as video servers across the internet.

‘Chunks’ of video

When it comes to video content – whether it’s a live stream or a prerecorded video – there are two major challenges to address. First, video data is massive in size, making it time-consuming to transmit from the source to devices such as TVs, computers, tablets and smartphones.

Second, streaming must be adaptive to accommodate differences in users’ devices and internet capabilities. For instance, viewers with lower-resolution screens or slower internet speeds should still be able to watch a given video, albeit in lower quality, while those with higher-resolution displays and faster connections enjoy the best possible quality.

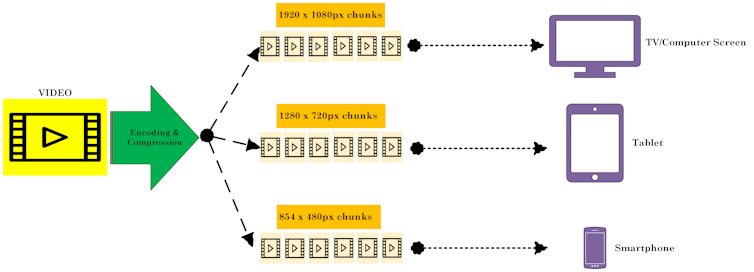

To tackle these challenges, video providers implement a series of optimizations. The first step involves fragmenting videos into smaller pieces, commonly referred to as “chunks.” These chunks then undergo a process called “encoding and compression,” which produces versions of each chunk at several resolutions and bitrates to suit various devices and network conditions.
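As a rough illustration, the sketch below splits a video's timeline into fixed-length chunks and estimates how large each chunk is at each quality level. The four-step "bitrate ladder" and the 4-second chunk length are hypothetical values chosen for the example, not figures from any particular service; the point is that every chunk exists in several encoded versions, which is what lets a player switch quality between chunks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    """One encoded version of the video (hypothetical ladder values)."""
    height: int        # vertical resolution in pixels, e.g. 720 for "720p"
    bitrate_kbps: int  # average encoded bitrate in kilobits per second


# Hypothetical bitrate ladder; real services tune these per title.
LADDER = [
    Rendition(240, 400),
    Rendition(480, 1_200),
    Rendition(720, 3_000),
    Rendition(1080, 6_000),
]


def chunk_boundaries(duration_s: float, chunk_s: float) -> list[tuple[float, float]]:
    """Split a video of duration_s seconds into (start, end) chunks of chunk_s
    seconds. The last chunk may be shorter."""
    bounds = []
    start = 0.0
    while start < duration_s:
        end = min(start + chunk_s, duration_s)
        bounds.append((start, end))
        start = end
    return bounds


def chunk_size_kb(chunk: tuple[float, float], rendition: Rendition) -> float:
    """Approximate chunk size in kilobytes: kbit/s * seconds / 8 bits per byte."""
    start, end = chunk
    return rendition.bitrate_kbps * (end - start) / 8


# Each chunk is stored once per rendition, so the player can pick any of them.
for chunk in chunk_boundaries(25, 4):
    sizes = {f"{r.height}p": chunk_size_kb(chunk, r) for r in LADDER}
    print(chunk, sizes)
```

For a 25-second clip cut into 4-second chunks, `chunk_boundaries(25, 4)` yields seven chunks, the last one only a second long, and a 4-second chunk at the hypothetical 3,000 kbps rung is about 1,500 kilobytes.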

When a user requests an on-demand video, the system dynamically selects the appropriate stream of chunks based on the user’s device and connection, such as screen resolution and current internet speed. The video player on the user’s device assembles and plays these chunks in sequence to create a seamless viewing experience.
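The selection step can be sketched as a simple rule: pick the best rendition that neither exceeds the screen's resolution nor outruns the measured connection speed. This is a minimal throughput-based heuristic under assumed values (the ladder and the 0.8 safety margin are hypothetical); real players use more elaborate logic, for example also weighing how much video is already buffered.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    height: int        # vertical resolution in pixels
    bitrate_kbps: int  # average encoded bitrate


# Hypothetical ladder, lowest to highest quality.
LADDER = [
    Rendition(240, 400),
    Rendition(480, 1_200),
    Rendition(720, 3_000),
    Rendition(1080, 6_000),
]


def select_rendition(ladder: list[Rendition], screen_height: int,
                     throughput_kbps: float, safety: float = 0.8) -> Rendition:
    """Choose the highest-bitrate rendition that (a) is no taller than the
    screen and (b) fits within a safety margin of the measured throughput.
    If nothing fits, fall back to the lowest-bitrate rendition so playback
    can continue at reduced quality."""
    budget = throughput_kbps * safety  # leave headroom for speed fluctuations
    candidates = [r for r in ladder
                  if r.height <= screen_height and r.bitrate_kbps <= budget]
    if not candidates:
        return min(ladder, key=lambda r: r.bitrate_kbps)
    return max(candidates, key=lambda r: r.bitrate_kbps)


print(select_rendition(LADDER, screen_height=1080, throughput_kbps=2_000))
```

A 1080p screen on a connection measured at 2,000 kbps gets the 480p rendition, because the 720p version's 3,000 kbps would exceed the 1,600 kbps budget. Re-running the choice before each chunk is what lets quality rise and fall with the connection.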

For users with slower internet connections, the system delivers lower-quality chunks to ensure smooth playback. This is why you might notice a drop in video quality when your connection speed is reduced. Similarly, if the video pauses during playback, it’s usually because your player is waiting to buffer additional chunks from the provider.

Chetan Jaiswal
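Why playback pauses can be shown with a small simulation. Each chunk holds a few seconds of video; while the next chunk downloads, the player keeps draining what is already buffered. If a download takes longer than the buffered video lasts, the picture freezes for the difference. The function below is a simplified model under stated assumptions: chunks download one after another, a single fixed bitrate is used, and the throughput seen for each chunk is supplied as hypothetical input.

```python
def simulate_stalls(chunk_s: float, bitrate_kbps: float,
                    throughputs_kbps: list[float], startup_chunks: int = 1) -> float:
    """Return total seconds spent frozen (rebuffering) once playback has started.

    Simplifying assumptions: chunks download back to back, every chunk uses the
    same bitrate, and throughputs_kbps gives the connection speed seen while
    fetching each successive chunk.
    """
    buffer_s = 0.0   # seconds of video downloaded but not yet played
    stalled_s = 0.0  # accumulated freeze time
    playing = False
    for i, tput in enumerate(throughputs_kbps, start=1):
        download_s = chunk_s * bitrate_kbps / tput  # kbits / (kbit/s) = seconds
        if playing:
            if download_s > buffer_s:
                # Buffer runs dry before the chunk arrives: the picture freezes.
                stalled_s += download_s - buffer_s
                buffer_s = 0.0
            else:
                buffer_s -= download_s  # playback drains the buffer meanwhile
        buffer_s += chunk_s  # the new chunk joins the buffer
        if not playing and i >= startup_chunks:
            playing = True  # startup delay over; playback begins
    return stalled_s


print(simulate_stalls(4, 3_000, [6_000] * 5))
print(simulate_stalls(4, 3_000, [1_500] * 3))
```

With 4-second chunks at 3,000 kbps, a steady 6,000 kbps connection fetches each chunk in 2 seconds and never stalls, while a 1,500 kbps connection needs 8 seconds per chunk and freezes for 4 seconds before each chunk after the first. Switching to a lower-quality stream is what avoids those freezes.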

Dealing with distance and congestion

Delivering video at scale, whether prerecorded or live, is a significant challenge given the immense number of videos consumed globally. Streaming services like YouTube, Hulu and Netflix host enormous libraries of on-demand content while also managing countless live streams happening worldwide.

A seemingly straightforward approach to delivering video content would involve building a massive data center to store all the videos and related content, then streaming them to users worldwide via the internet. However, this method isn’t favored because it comes with significant challenges.

One major issue is geographic latency, where a user’s location relative to the data center affects the delay they experience. For instance, if a data center is located in Virginia, a user in Washington, D.C., would experience minimal delay, while a user in Australia would face much longer delays due to the increased distance and the need for the data to traverse multiple interconnected networks. This added travel time slows down content delivery.
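The distance penalty can be estimated with back-of-the-envelope arithmetic. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200,000 km per second, so distance alone sets a floor on round-trip time before any routing or queuing delay is added. The sketch assumes a hypothetical data center in Ashburn, Virginia, and uses straight-line (great-circle) distances, which real cable routes always exceed.

```python
import math

EARTH_RADIUS_KM = 6371.0
FIBER_KM_PER_S = 200_000  # approximate speed of light in optical fiber


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance in km between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time: propagation only, no routing or queuing."""
    return 2 * distance_km / FIBER_KM_PER_S * 1000


# Hypothetical data center location (Ashburn, Virginia) and two viewers.
DATA_CENTER = (39.04, -77.49)
VIEWERS = {"Washington, D.C.": (38.91, -77.04), "Sydney": (-33.87, 151.21)}

for city, (lat, lon) in VIEWERS.items():
    d = great_circle_km(*DATA_CENTER, lat, lon)
    print(f"{city}: {d:,.0f} km, at least {min_round_trip_ms(d):.1f} ms round trip")
```

Ashburn to Washington, D.C., is roughly 40 km, a propagation floor of well under a millisecond; Ashburn to Sydney is roughly 15,700 km, a floor of more than 150 ms for every request and response, before the extra hops through interconnected networks are counted.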

Another problem is network congestion. As more users worldwide connect to the central data center, the interconnecting networks become increasingly busy, resulting in frustrating delays and video buffering. Additionally, when the same video is sent simultaneously to multiple users, duplicate data traveling over the same internet links wastes bandwidth and further congests the network.
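The duplication problem is plain multiplication. If every viewer in a region receives a separate stream from a distant data center, a shared long-haul link toward that region carries one copy per viewer. The figures below (10,000 viewers, a 5 Mbps stream) are hypothetical, and the shared-copy case assumes a single rendition pulled once by a server near the viewers.

```python
def link_load_gbps(viewers: int, bitrate_mbps: float, shared_copy: bool = False) -> float:
    """Traffic on one shared long-haul link toward a region of viewers.

    shared_copy=False: every viewer gets a separate stream from the distant
    data center, so the link carries one copy per viewer.
    shared_copy=True: a server near the viewers pulls a single copy over the
    link and fans it out locally (assumes one rendition, for simplicity).
    """
    copies = 1 if shared_copy else viewers
    return copies * bitrate_mbps / 1000  # Mbps -> Gbps


def redundant_fraction(viewers: int) -> float:
    """Share of per-viewer traffic on the link that repeats bytes already sent."""
    return (viewers - 1) / viewers


print(link_load_gbps(10_000, 5))                    # one copy per viewer
print(link_load_gbps(10_000, 5, shared_copy=True))  # one copy in total
print(redundant_fraction(10_000))
```

With these numbers, 10,000 separate streams put 50 Gbps on the link, and 99.99% of that traffic is the same bytes sent again.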

A centralized data center also creates a single point of failure. If the data center experiences an outage, no users can access their content, leading to a complete service disruption.
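A rough way to quantify the single-point-of-failure risk is to compare one site with several independent ones. Assuming, hypothetically, that each site is up 99% of the time and that sites fail independently (real outages are often correlated), the chance that every copy is down at once shrinks geometrically with the number of sites.

```python
HOURS_PER_YEAR = 24 * 365


def availability(site_availability: float, sites: int) -> float:
    """Probability that at least one of `sites` copies is reachable,
    assuming each site fails independently of the others."""
    return 1 - (1 - site_availability) ** sites


def downtime_hours_per_year(avail: float) -> float:
    """Expected hours per year during which no copy is reachable."""
    return (1 - avail) * HOURS_PER_YEAR


for n in (1, 2, 3):
    a = availability(0.99, n)
    print(f"{n} site(s): {a:.6f} available, ~{downtime_hours_per_year(a):.3f} h/yr down")
```

One site at 99% availability is down about 87.6 hours a year; three independent sites are all down at the same time only about one millionth of the time, or roughly half a minute a year.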

Content delivery networks

To address these challenges, most content providers rely on content delivery networks. These networks distribute content through globally scattered points of presence, which are clusters of servers that store copies of high-demand content locally. This approach significantly reduces latency and improves reliability.
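The core behavior of a point of presence, keeping local copies of popular content, can be sketched as a cache with least-recently-used (LRU) eviction. LRU is an assumption chosen for simplicity; commercial CDNs use their own, more sophisticated policies. The `fetch_from_origin` callback is a hypothetical stand-in for the long-haul request back to the provider's data center.

```python
from collections import OrderedDict
from typing import Callable


class PopCache:
    """Toy point-of-presence cache holding at most `capacity` chunks.

    Hits are served locally; misses go to the origin via the hypothetical
    fetch_from_origin callback, and the least recently used chunk is evicted
    when the cache is full."""

    def __init__(self, capacity: int, fetch_from_origin: Callable[[str], bytes]):
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin
        self.store = OrderedDict()  # chunk_id -> bytes, oldest first
        self.hits = 0
        self.misses = 0

    def get(self, chunk_id: str) -> bytes:
        if chunk_id in self.store:
            self.store.move_to_end(chunk_id)  # mark as most recently used
            self.hits += 1
            return self.store[chunk_id]
        self.misses += 1
        data = self.fetch_from_origin(chunk_id)  # long-haul trip to the origin
        self.store[chunk_id] = data
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)  # evict least recently used chunk
        return data


# Usage: 1,000 nearby viewers request the same live chunk.
cache = PopCache(capacity=100, fetch_from_origin=lambda cid: cid.encode())
for _ in range(1_000):
    cache.get("final-match/segment-0001")
print(cache.hits, cache.misses)
```

If 1,000 nearby viewers request the same chunk of a live match, the point of presence contacts the origin once and serves the other 999 requests locally.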

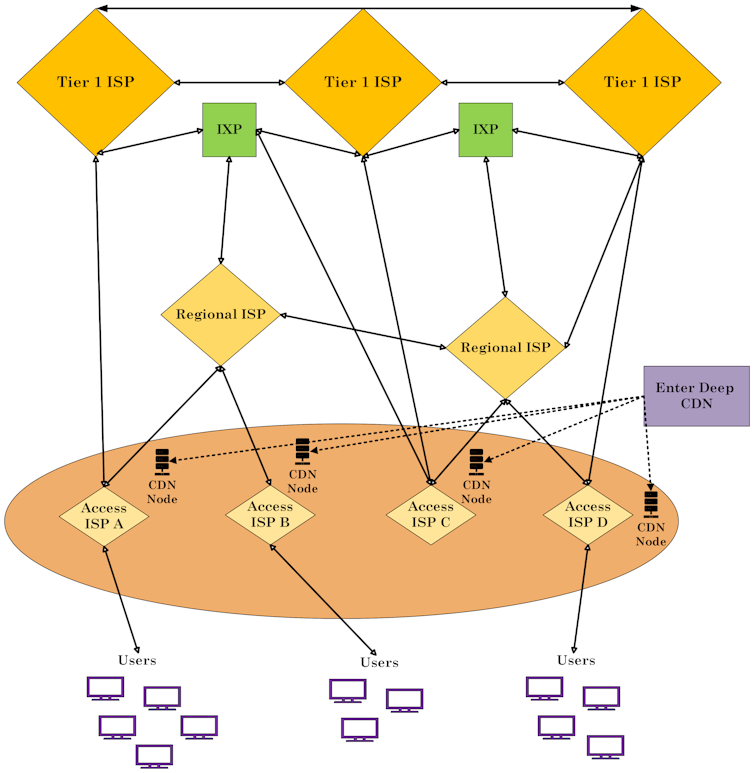

Content delivery network providers, such as Akamai and Edgio, implement two main strategies for deploying points of presence.

The first is the “Enter Deep” approach, where thousands of smaller point-of-presence nodes are placed closer to users, often within internet service provider networks. This ensures minimal latency by bringing the content as close as possible to the end user.

Chetan Jaiswal

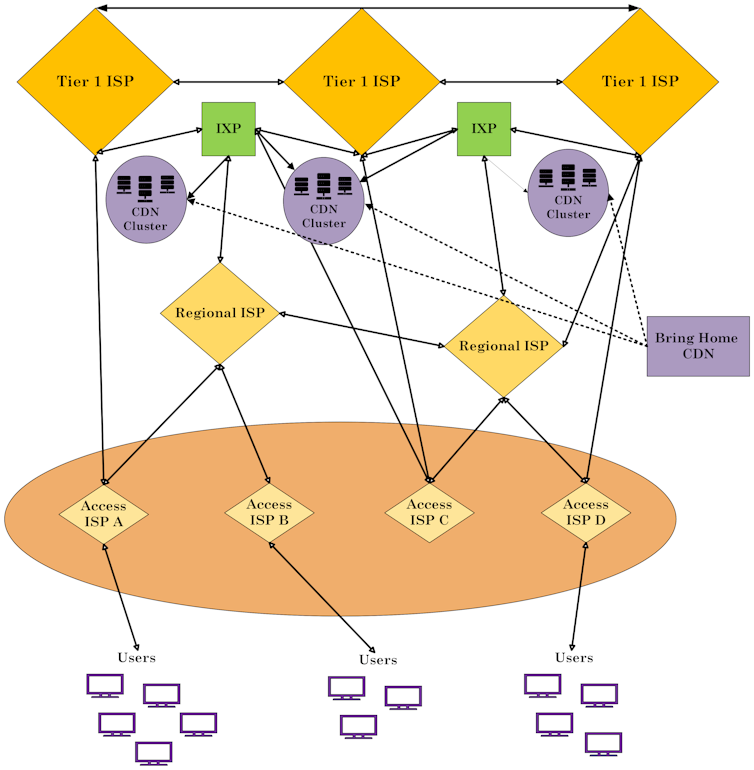

The second strategy is “Bring Home,” which involves deploying hundreds of larger point-of-presence clusters at strategic locations, typically where ISPs interconnect: internet exchange points. While these clusters are farther from users than in the Enter Deep approach, they are larger in capacity, allowing them to handle higher volumes of traffic efficiently.

Chetan Jaiswal
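Both strategies come down to a routing decision: send each viewer to a server that is close but not overloaded. The sketch below models that trade-off with hypothetical numbers: a small node inside an ISP's network (Enter Deep) and a larger cluster at an internet exchange point (Bring Home), with deliberately tiny capacities so the fallback is easy to see. Choosing the lowest-latency point of presence with spare capacity is an illustrative assumption; real CDNs map users to servers with considerably more elaborate systems.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pop:
    name: str
    rtt_ms: float       # round-trip time from this group of users (hypothetical)
    capacity_mbps: int  # total serving capacity
    load_mbps: int = 0  # capacity already in use


def assign_viewer(pops: list[Pop], demand_mbps: int) -> Optional[Pop]:
    """Assign a viewer to the lowest-latency PoP that still has room,
    reserving the viewer's bandwidth there. Returns None if all are full."""
    for pop in sorted(pops, key=lambda p: p.rtt_ms):
        if pop.load_mbps + demand_mbps <= pop.capacity_mbps:
            pop.load_mbps += demand_mbps  # reserve capacity for this stream
            return pop
    return None


deep = Pop("isp-edge", rtt_ms=3.0, capacity_mbps=20)        # Enter Deep node
ixp = Pop("ixp-cluster", rtt_ms=15.0, capacity_mbps=1_000)  # Bring Home cluster
for viewer in range(6):
    print(viewer, assign_viewer([ixp, deep], demand_mbps=5).name)
```

While the ISP node has room, viewers get its 3 ms round trip; once it fills up, new viewers are sent to the larger, 15 ms exchange-point cluster instead of being turned away.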

Infrastructure for a connected world

Both strategies aim to optimize video streaming by reducing delays, minimizing bandwidth waste and ensuring a seamless viewing experience for users worldwide.

The rapid expansion of the internet and the surge in video streaming – both live and on demand – have transformed how video content is delivered to users globally. However, the challenges of handling massive amounts of video data, reducing geographic latency and accommodating varying user devices and internet speeds require sophisticated solutions.

Content delivery networks have emerged as a cornerstone of modern streaming, enabling efficient and reliable delivery of video. This infrastructure supports the growing demand for high-quality video and highlights the innovative approaches needed to meet the expectations of a connected world.


Chetan Jaiswal does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.